Case Study / APP STORE OPTIMIZATION

How A/B testing and experimentation can provide validating insights for your future ASO strategy

Background:

- Increase the COUP e-scooter app’s organic visibility.

- Increase its conversion rate (CVR) so that the added visibility turns into more installs.

The problem:

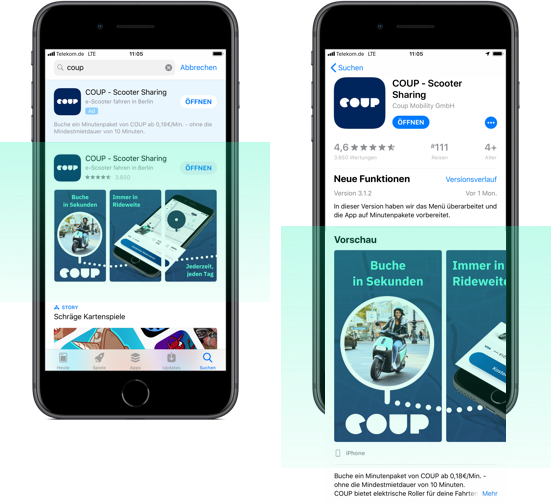

When we kicked off our ASO project with COUP, their original set of screenshots was already good: a white background and a clean, modern, clear design. The strategy in place at the time was minimalistic, straightforward and honest. The idea was to explain to users, in technical terms, what COUP can do as a shared e-scooter service provider.

However, we felt that this technical focus might have left out a large number of COUP’s potential customers. In our experience, business-to-consumer (B2C) brands like COUP tend to attract more users with emotional, dynamic design and communication than with technical explanations. We also had several ideas for what alternative approaches could look like. What was missing was a reliable way to identify which would work best – and that’s where A/B testing came in.

So, here are the screenshots they were using...

The solution:

We developed three communication strategies for COUP’s app store screenshots to test against the original version. We ran them as three separate tests, each lasting one month. This kept conditions consistent and gave each variant as much traffic as possible (an ABCD test would give each variant only 25% of the traffic).

Our goal in this project was not to generate tactical learnings or quick results – that we can do later – but to gain strategic, high-level insights that help us validate a whole roadmap of future A/B tests.
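The traffic trade-off can be made concrete with a small sketch. It assumes the store splits traffic evenly across all variants in a test, so a two-variant test gives each side half; the monthly visitor count is a made-up figure for illustration, not COUP data.

```python
def traffic_share_per_variant(num_variants: int) -> float:
    """Share of store-listing traffic each variant receives,
    assuming an even split across all variants (an assumption)."""
    if num_variants < 1:
        raise ValueError("a test needs at least one variant")
    return 1.0 / num_variants


def visitors_per_variant(total_visitors: int, num_variants: int) -> float:
    """Visitors each variant would see in one test period."""
    return total_visitors * traffic_share_per_variant(num_variants)


# Hypothetical monthly store-listing visitors (not real COUP data).
MONTHLY_VISITORS = 10_000

ab_share = traffic_share_per_variant(2)    # Control + one test variant
abcd_share = traffic_share_per_variant(4)  # Control + three test variants

print(f"A/B test:  {ab_share:.0%} of traffic per variant, "
      f"{visitors_per_variant(MONTHLY_VISITORS, 2):.0f} visitors")
print(f"ABCD test: {abcd_share:.0%} of traffic per variant, "
      f"{visitors_per_variant(MONTHLY_VISITORS, 4):.0f} visitors")
```

Under these assumptions, each variant in a sequential A/B test sees twice the traffic it would in a single ABCD test, at the cost of a longer overall project.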

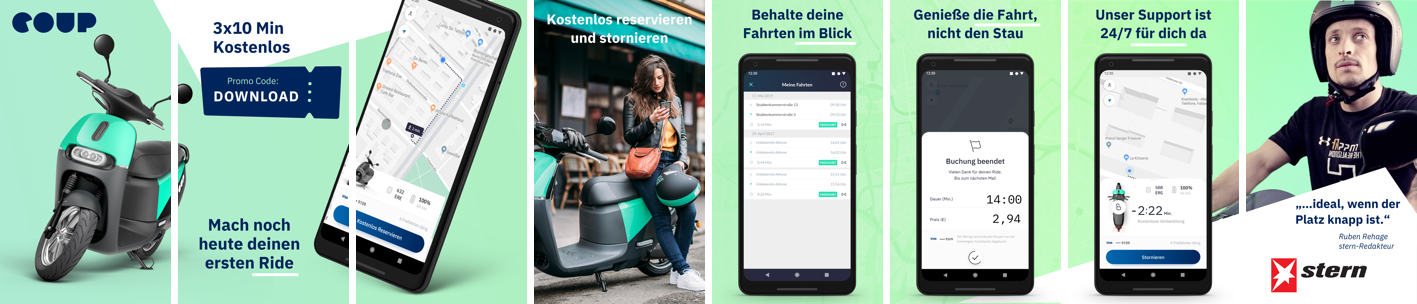

Let’s have a look at our new test variants created by Customlytics:

Test 1

The concept behind this variant is the journey COUP offers its users, as in “the fastest way from A to B”. The background replicates the traffic map from the app and symbolizes the journey. There are also iconic representations of the three main cities COUP supported, namely Berlin, Paris and Madrid, which say more with fewer words. We also increased the contrast between the background and the captions to make them more legible.

Test 2

Test 3

The results:

The initial result after we tested Test variant #1 against the original version (the Control variant) was very positive. According to statistics from Google Play Store Listing Experiment (the primary A/B testing platform we use), Test variant #1 would convert 20% better than the Control – already a major achievement for conversion rate optimization (CRO).
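As a rough illustration of what a 20% improvement means, the sketch below computes the relative uplift in conversion rate between two variants. The visitor and install counts are invented so that the arithmetic lands on 20%; they are not COUP’s figures, and the experiment platform’s own statistics may be calculated differently.

```python
def conversion_rate(installs: int, visitors: int) -> float:
    """Installs divided by store-listing visitors."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return installs / visitors


def relative_uplift(control_cvr: float, variant_cvr: float) -> float:
    """Relative change of the variant's CVR versus the Control's CVR."""
    if control_cvr <= 0:
        raise ValueError("control CVR must be positive")
    return (variant_cvr - control_cvr) / control_cvr


# Hypothetical counts, chosen only to illustrate the calculation.
control_cvr = conversion_rate(installs=1_000, visitors=5_000)  # 20% CVR
variant_cvr = conversion_rate(installs=1_200, visitors=5_000)  # 24% CVR

uplift = relative_uplift(control_cvr, variant_cvr)
print(f"Control CVR: {control_cvr:.1%}")
print(f"Variant CVR: {variant_cvr:.1%}")
print(f"Relative uplift: {uplift:.0%}")
```

Note that the uplift is relative: in this made-up example the CVR rises by four percentage points, which is a 20% improvement over the Control.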

With this result, here is what we did next:

- We replaced the Control variant screenshots with the Test variant #1 ones.

- We prepared for the second round with Test variant #2, which would then be tested against Test variant #1.

The result of the second test was a loss. This means Test variant #1 performed better than #2. However, we treated this as a learning and not a failure – it helped us rethink priorities in COUP’s CRO strategy. For instance, we would now prioritize the blue brand color over the green and focus on the storytelling of the journey instead of the promotions.

Next, we produced Test variant #3 and ran it against #1. As in the second test, the result was a loss, this time by a larger margin. This eliminated most of our remaining doubts: we now knew that a “quirky” approach wasn’t right for COUP and would be best avoided.
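The sequence of tests follows a simple champion/challenger pattern: the current best variant stays live until a challenger beats it. A minimal sketch of that logic, using only the win/loss outcomes reported above (the function and variable names are illustrative, not part of any tool we used):

```python
def run_sequence(outcomes: list[tuple[str, bool]], initial: str = "control") -> str:
    """Walk through sequential A/B tests and return the final champion.

    Each outcome is (challenger_name, challenger_won). A winning
    challenger replaces the current champion; a losing one is dropped.
    """
    champion = initial
    for challenger, challenger_won in outcomes:
        if challenger_won:
            champion = challenger
    return champion


# Outcomes as described in this case study.
outcomes = [
    ("test_variant_1", True),   # beat the Control
    ("test_variant_2", False),  # lost to Test variant #1
    ("test_variant_3", False),  # lost to Test variant #1 by a larger margin
]

final_champion = run_sequence(outcomes)
print(final_champion)
```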

Conclusion:

After a three-month A/B testing project, we helped our client COUP learn a lot about their market and users. We also helped them establish a foundation for CRO. In short, our results include: